Generative AI: a new weapon for cyber-attackers or an asset for organisational cybersecurity?

Generative AI is making headlines and has entered our daily lives, especially since the release of ChatGPT. Generating both fear and enthusiasm, it is already being used in a wide range of sectors: healthcare (faster diagnosis, personalised treatment plans), education (personalised learning plans), software development (easier debugging, generation of code snippets) and more.

But what do we mean by ‘generative AI’? The Canadian Centre for Cyber Security provides this definition: “Generative AI is a type of artificial intelligence that creates new content by modelling the characteristics of data from the large data sets that feed the model. While traditional AI systems can identify patterns or classify existing content, generative AI can create new content in many forms, such as text, an image, an audio file, or software code”.

Cyber attackers did not wait long to turn generative AI to their advantage: many intrusion sets quickly adopted it to compromise their targets. However, they are not the only ones to have understood the benefits of this technology. Cybersecurity professionals have also embraced it to help organisations better protect themselves and strengthen their resilience. This article looks at how this much-hyped tool is being used by both attackers and defenders.

Generative AI, an offensive tool for cyber attackers

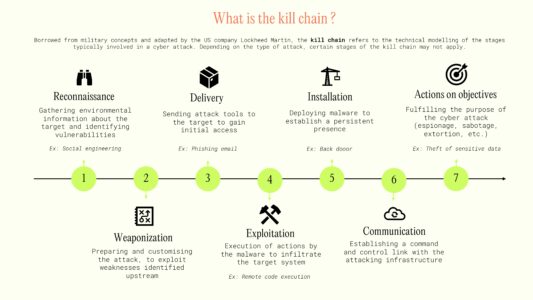

Cyber attackers began using AI in 2020, as generative AI became more widely accessible. Thanks to its capabilities, it can be used at every stage of the kill chain, a model commonly used to represent the stages of a cyber attack and the modus operandi used at each one.

Reconnaissance & delivery: At this stage, cyber attackers often use social engineering to identify valuable targets or obtain key information to compromise them. Generative AI can be used to carry out phishing operations, in particular to send mass emails that are convincing and tailored to each victim. The technology thus brings together two approaches that were previously mutually exclusive: mass phishing, with a low conversion rate but a large number of targets, and targeted phishing (or spearphishing), with a high conversion rate but a small number of targets. These campaigns may also include audio or video deepfakes. In a recent example from early 2024, an employee in the finance department of a multinational company in Hong Kong was tricked into transferring more than $25 million to cybercriminals after a fake video conference in which deepfakes impersonated senior figures in his company.

Generative AI can also be used to create fake social network accounts, fake websites and credible blog posts. For cyber attackers, this is an opportunity to spread false information more widely in order to destabilise a company or a state. In the current conflict between Israel and Hamas, Iran has conducted extensive influence campaigns to discredit the Israeli government and its military operations, as documented in a Microsoft Security Insider article. A large network of ‘sockpuppets’ (social network accounts that use a fake or stolen identity to spread false information) has been deployed, using generative AI to produce and distribute pro-Hamas content while posing as Israeli internet users.

Weaponization: Generative AI can be used to enhance malware, increasing its sophistication and performance. For example, AI-assisted malware could automatically adapt to and blend in with legitimate network traffic, making it harder to detect. For cybercriminals with little technical knowledge, it also lowers the barrier to entry for creating malware. One example is WormGPT, a generative AI tool that can be used to craft sophisticated phishing and business email compromise (BEC) attacks.

Installation & lateral movement: Generative AI’s scripting capabilities can make certain operations easier or more dynamic, such as listing the processes running on a machine, adapting artefacts to their context, or finding potential propagation paths from an infected machine.

Command & Control: Generative AI could make communication between malware and its operator stealthier by crafting ad hoc requests that are harder to match against known patterns. Combined with the ability to mimic normal network activity described above, this could be a significant gain in stealth.

Actions on objectives: Generative AI could enable malware to select by itself which valuable data to exfiltrate or encrypt, limiting network flows and suspicious operations and making detection more difficult. Once the data has been exfiltrated, cyber attackers save time by analysing it quickly, at scale and in multiple languages, work that previously required specific skills or even dedicated teams.

In addition, generative AI can itself become an attack vector, particularly for cyber espionage. Because of the efficiency gains it brings, companies have integrated AI into their processes, and many professionals now use it. While companies can deploy proprietary AI systems based on pre-trained models, they often opt for public AI services such as ChatGPT. This can create a vulnerability if the servers processing requests are compromised: malicious actors could then retrieve employees’ conversations with the AI, which are valuable because they may contain internal source code, confidential commercial or financial information, credentials for internal systems, etc.
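One way to reduce this exposure is to filter prompts before they leave the organisation. The following is a minimal, hypothetical sketch: the `redact_prompt` function and the handful of regular-expression patterns (email addresses, AWS-style access key IDs, `password=` assignments, PEM private-key headers) are illustrative assumptions, not part of any specific product, and a real data-loss-prevention filter would need far broader, organisation-specific rules.

```python
import re

# Hypothetical, illustrative patterns only; a real filter needs far broader,
# organisation-specific rules (internal hostnames, project names, etc.).
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "AWS_ACCESS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "PASSWORD": re.compile(r"(?i)\b(password|passwd|pwd)\s*[:=]\s*\S+"),
    "PRIVATE_KEY": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


def redact_prompt(prompt: str) -> tuple[str, list[str]]:
    """Mask sensitive-looking substrings before a prompt is sent to a public AI service.

    Returns the redacted text and the names of the patterns that matched,
    so the event can be logged or the user warned.
    """
    findings = []
    for name, pattern in PATTERNS.items():
        # subn returns the new string and the number of replacements made
        prompt, count = pattern.subn(f"[REDACTED:{name}]", prompt)
        if count:
            findings.append(name)
    return prompt, findings


if __name__ == "__main__":
    text = "Fix this login script: user=ops@example.com password=Hunter2!"
    redacted, hits = redact_prompt(text)
    print(redacted)  # Fix this login script: user=[REDACTED:EMAIL] [REDACTED:PASSWORD]
    print(hits)      # ['EMAIL', 'PASSWORD']
```

Pattern-based redaction of this kind only catches secrets with a recognisable shape; it will not flag confidential source code or financial figures, so it complements, rather than replaces, usage policies and proprietary deployments.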

Generative AI, an asset for improving corporate cybersecurity

Fortunately, cyber attackers do not have a monopoly on AI. Its power can also be used to counter the cyber threat, and companies and government organisations such as the NSA are already doing so. The advantage AI gives attackers can be offset by using it to improve security and resilience.

Generative AI is now widely used in education and can be an effective way to deliver personalised cybersecurity training that improves employees’ practices. In doing so, it helps prevent cyber risks within organisations.

Generative AI can also be used upstream to reduce risk. In particular, it can analyse the attack surface, especially the human attack surface, to identify potential weaknesses and correct them more effectively.

By automating certain tasks, AI can reduce the workload of CISOs and cybersecurity professionals. This does not mean that it will replace humans; rather, it will support them so that they can focus their efforts on other tasks that improve security.

It can be used to simulate crises, detect phishing based on the content of messages and web pages, process and prioritise alerts while reducing false positives, and simplify incident reporting and remediation. Generative AI can also help organisations prepare for cyber attacks by dynamically generating realistic scenarios during training sessions.

In the event of a cyber attack, generative AI can help improve crisis communications. When reaction time, careful wording and emotional control are critical, AI can help teams formulate clear messages quickly and organise themselves better to restore the information system to normal operation.

In some cases, AI can also help share cyber risk through better insurance coverage. It can be used to model insurance policies and risk-sharing terms automatically, allowing organisations to analyse their cover and make better decisions. The capabilities of generative AI could also make it possible to generate personalised general and special policy conditions based on advanced risk analysis, as suggested in a recent ENISA report.

Generative AI became widely accessible following the launch of ChatGPT in late 2022. Its capabilities have certainly attracted cyber attackers, but it also represents a significant advance for cybersecurity. It will not replace professionals in this field, but it can play a supporting role, particularly in detecting cyber threats and informing decisions about the security of an organisation’s information.